SoE HPC Cluster

Announcements

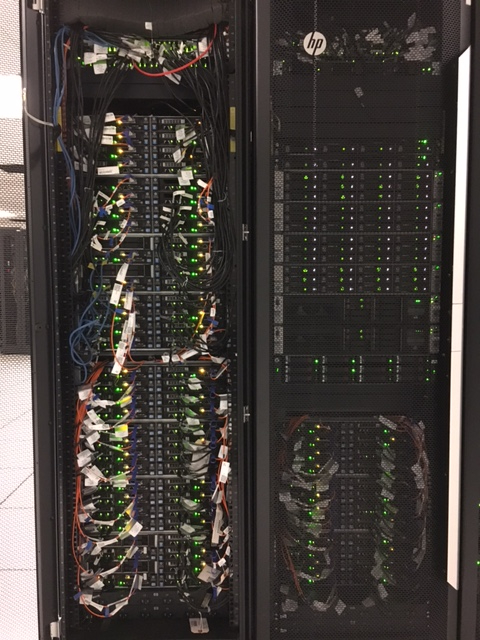

Hardware Architecture

The cluster hardware is based on a combination of nodes with Intel Sandy Bridge 2670, Ivy Bridge 2670v2, Broadwell 2680v4, and Skylake Gold 6132 CPUs and AMD Epyc CPUs, with 128 GB of RAM on most nodes. There are 86 nodes in the cluster available for general use, plus 60 nodes dedicated to several Engineering research groups. The nodes are interconnected over FDR 56 Gbit/s InfiniBand and 1 Gbit/s networks. A distributed cluster file system, BeeGFS, is available for parallel multi-node simulations. Each of the two interactive front-end machines has two NVIDIA Kepler K20 GPU cards installed.

Software Installed

At the moment, the following apps have been installed on the cluster for general use:

- Intel C/C++/Fortran compilers

- GNU GCC/G++/gfortran compilers

- Open MPI (compiled with GNU and Intel compilers)

- LAMMPS

- GROMACS

- Gaussian

- Matlab

- Comsol

- CUDA

Environment

The front-end hosts and the storage server on the cluster can be accessed via SSH from Rutgers University (RU) networks. See the details here.

The cluster resources are managed by the SLURM queue system. Users must submit all their applications through batch scripts to run them on the cluster. Here is a reference to the most common SLURM commands.
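SLURM's `sbatch` reads `#SBATCH` directives from the comment lines at the top of a script, before the first executable line, whatever interpreter the shebang names, so a batch script does not have to be written in shell. The sketch below assumes Python 3 is available on the compute nodes. The job name, node and task counts, time limit, and output file name are illustrative values, not site defaults, and no partition is requested because partition names for this cluster are not listed here.

```python
#!/usr/bin/env python3
#SBATCH --job-name=example_job
#SBATCH --nodes=1
#SBATCH --ntasks-per-node=16
#SBATCH --time=01:00:00
#SBATCH --output=example_job_%j.out
"""Minimal SLURM batch script written in Python (illustrative sketch).

The #SBATCH values above are hypothetical examples; adjust them to your job.
%j in the output file name is replaced by the SLURM job ID.
"""
import os


def job_summary(env=os.environ):
    """Collect a few variables that SLURM sets inside a running job.

    Defaults are used when a variable is missing, e.g. when the script
    is run outside of SLURM for testing.
    """
    return {
        "job_id": env.get("SLURM_JOB_ID", "none"),
        "num_nodes": int(env.get("SLURM_JOB_NUM_NODES", "1")),
        "ntasks": int(env.get("SLURM_NTASKS", "1")),
        "submit_dir": env.get("SLURM_SUBMIT_DIR", os.getcwd()),
    }


if __name__ == "__main__":
    info = job_summary()
    print(f"Job {info['job_id']} on {info['num_nodes']} node(s), "
          f"{info['ntasks']} task(s), submitted from {info['submit_dir']}")
    # Replace this placeholder with the actual computation.
```

Assuming the file is saved as `example_job.py`, it would be submitted with `sbatch example_job.py`, and its state checked with `squeue -u $USER`.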

Computational jobs can run on the following general use compute nodes:

- soenode[03-50]: Intel Sandy Bridge 2670, 16 CPU cores per node, 128 GB of RAM

- soenode[75-82]: Intel Ivy Bridge 2670v2, 20 CPU cores per node, 128 GB of RAM

- soenode[87-110]: Intel Broadwell 2680v4, 28 CPU cores per node, 128 GB of RAM

- soenode[111-114]: Intel Skylake Gold 6132, 28 CPU cores per node, 256 GB of RAM

- soeepyc[01]: AMD Epyc 7702, 128 CPU cores per node, 256 GB of RAM

- soeepyc[02]: AMD Epyc 7452, 64 CPU cores per node, 256 GB of RAM
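The bracketed names above use SLURM's hostlist notation, in which `soenode[03-50]` stands for `soenode03` through `soenode50`. As a cross-check of the node and core counts, the sketch below expands each range and totals the cores of the general-use nodes. It handles only the single-range form that appears in this list; real SLURM hostlists also allow comma-separated ranges, and on the cluster itself `scontrol show hostnames` performs the full expansion.

```python
import re

# General-use nodes as listed above: (hostlist range, CPU cores per node).
GENERAL_NODES = [
    ("soenode[03-50]", 16),    # Intel Sandy Bridge 2670
    ("soenode[75-82]", 20),    # Intel Ivy Bridge 2670v2
    ("soenode[87-110]", 28),   # Intel Broadwell 2680v4
    ("soenode[111-114]", 28),  # Intel Skylake Gold 6132
    ("soeepyc[01]", 128),      # AMD Epyc 7702
    ("soeepyc[02]", 64),       # AMD Epyc 7452
]

_RANGE = re.compile(r"^([A-Za-z]+)\[(\d+)(?:-(\d+))?\]$")


def expand_hostlist(spec):
    """Expand 'prefix[lo-hi]' or 'prefix[n]' into individual host names.

    Zero padding follows the width of the lower bound, so 'soenode[03-50]'
    yields 'soenode03' ... 'soenode50'. Comma-separated lists are not handled.
    """
    m = _RANGE.match(spec)
    if m is None:
        raise ValueError(f"unsupported hostlist: {spec!r}")
    prefix, lo = m.group(1), m.group(2)
    hi = m.group(3) or lo
    width = len(lo)
    return [f"{prefix}{n:0{width}d}" for n in range(int(lo), int(hi) + 1)]


def total_cores(nodes=GENERAL_NODES):
    """Sum CPU cores over every host in the given ranges."""
    return sum(len(expand_hostlist(spec)) * cores for spec, cores in nodes)


if __name__ == "__main__":
    count = sum(len(expand_hostlist(spec)) for spec, _ in GENERAL_NODES)
    print(f"{count} general-use nodes, {total_cores()} CPU cores")
```

Running it prints `86 general-use nodes, 1904 CPU cores`, which agrees with the 86 general-use nodes given in the hardware description.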

The application environment, such as the paths to binaries and shared libraries as well as the licenses, is loaded and unloaded through environment modules. For example, to see which modules are available on the cluster, run `module avail`; to load a module, say MATLAB, run `module load matlab/2022a`. More information on using the environment modules can be found at this link.
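Because `module` is normally a shell function defined at login rather than a standalone executable, a Python job script usually cannot call it directly through `subprocess`. One workaround is to run `module load` and the program in the same shell. The sketch below assumes that a login shell (`bash -l`) on the compute nodes defines `module`; the version `matlab/2022a` comes from the example above, while `myscript` is a hypothetical MATLAB script name.

```python
import shlex


def module_command(modules, command):
    """Build a bash invocation that loads modules, then runs `command`.

    Assumes `bash -l` sets up the environment-modules `module` function,
    as is typical on clusters; verify this on the front-end hosts first.
    Module names are shell-quoted; `command` is passed through unchanged.
    """
    loads = " && ".join(f"module load {shlex.quote(m)}" for m in modules)
    script = f"{loads} && {command}" if loads else command
    return ["bash", "-lc", script]
```

Inside a job, the result would be passed to `subprocess.run(..., check=True)`, for example `module_command(["matlab/2022a"], "matlab -batch myscript")`, so that the job fails visibly if the module or the program cannot be found.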

There are three file systems available for computations: the local /tmp on each node, Lustre, and NFS. Both Lustre and NFS are shared file systems that present the same file system image to all the compute nodes. If you run a serial or parallel job that uses only one node, always use the local /tmp file system. Multi-node MPI runs need a shared file system, so please use Lustre for them. Avoid using NFS: its I/O saturates quickly when several jobs run in parallel, and it then becomes extremely slow. For details on using the file systems on the cluster, follow this link.
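The file-system rule above can be expressed as a small decision function. This is a sketch only: the Lustre mount point is not given on this page, so `lustre_root` is a hypothetical parameter to be set to the actual path, and the per-user, per-job subdirectory layout is an illustrative convention, not a site requirement.

```python
from pathlib import PurePosixPath


def scratch_dir(num_nodes, job_id, user, lustre_root):
    """Pick a working directory following the cluster's file-system advice.

    - Single-node jobs (serial or parallel): node-local /tmp.
    - Multi-node MPI jobs: the shared Lustre file system.
    NFS is deliberately never returned, since its I/O saturates quickly.
    `lustre_root` is an assumed mount point supplied by the caller.
    """
    if num_nodes < 1:
        raise ValueError("num_nodes must be at least 1")
    if num_nodes == 1:
        base = PurePosixPath("/tmp")
    else:
        base = PurePosixPath(lustre_root)
    return base / user / str(job_id)
```

Inside a job, `num_nodes` and `job_id` can be taken from the `SLURM_JOB_NUM_NODES` and `SLURM_JOB_ID` environment variables. Node-local /tmp is not visible from other nodes, so results written there should be copied back to a shared location before the job ends.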

Account on the Cluster

Who is eligible for an account: Engineering faculty, postdocs, and graduate students

Account Requirements: EIT/DSV Account

Application for SOE HPC Cluster Account: http://ecs.rutgers.edu/soe_hpc_cluster_application (NOTE: You must be on a Rutgers-networked computer or VPN to access it!) Undergraduate and graduate students should have their faculty advisor send a confirmation e-mail to Alexei about the requested computing resources.

Those with accounts may access the SOE HPC cluster via SSH to soemaster1.hpc.rutgers.edu and soemaster2.hpc.rutgers.edu.

ACCESS NOTE: The two hosts above and the application URL are accessible only from WITHIN Rutgers' networks, so you must be logged in to a Rutgers computer, either physically or remotely via SSH, or connected through the VPN, to access them.
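Because the front-end hosts accept connections only from within Rutgers' networks, it can help to check whether the SSH port is reachable at all before debugging an account or password problem. A minimal sketch using only the Python standard library, assuming SSH listens on the default port 22; from off campus, a `False` result most likely means the VPN is not connected.

```python
import socket

# Front-end hosts named above; port 22 is assumed to be the SSH port.
FRONT_ENDS = ["soemaster1.hpc.rutgers.edu", "soemaster2.hpc.rutgers.edu"]


def ssh_reachable(host, port=22, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout.

    Name-resolution failures, refused connections, and timeouts are all
    subclasses of OSError and are reported as unreachable.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

For example, `[ssh_reachable(h) for h in FRONT_ENDS]` checks both front ends. This only tests network reachability; it does not log in or validate the account.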