#!/bin/bash

#SBATCH --job-name=OMP_run

#SBATCH --time=02:15:00

#SBATCH --output=slurm.out

#SBATCH --error=slurm.err

#SBATCH --partition=SOE_main

#SBATCH --ntasks-per-node=16

myrun=for.x # define executable to run

export OMP_NUM_THREADS=$SLURM_JOB_CPUS_PER_NODE # assign the number of threads for OpenMP

MYHDIR=$SLURM_SUBMIT_DIR # directory with input/output files

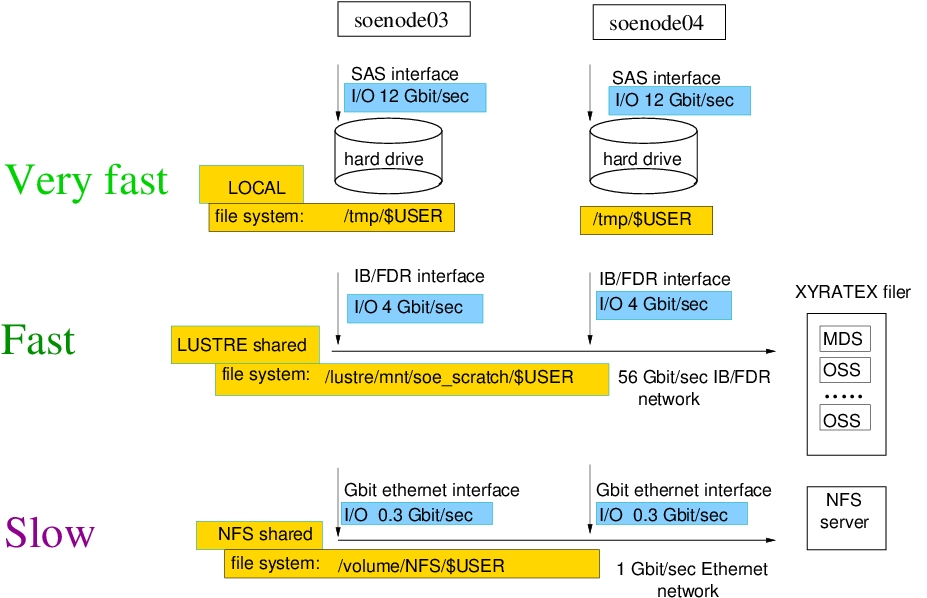

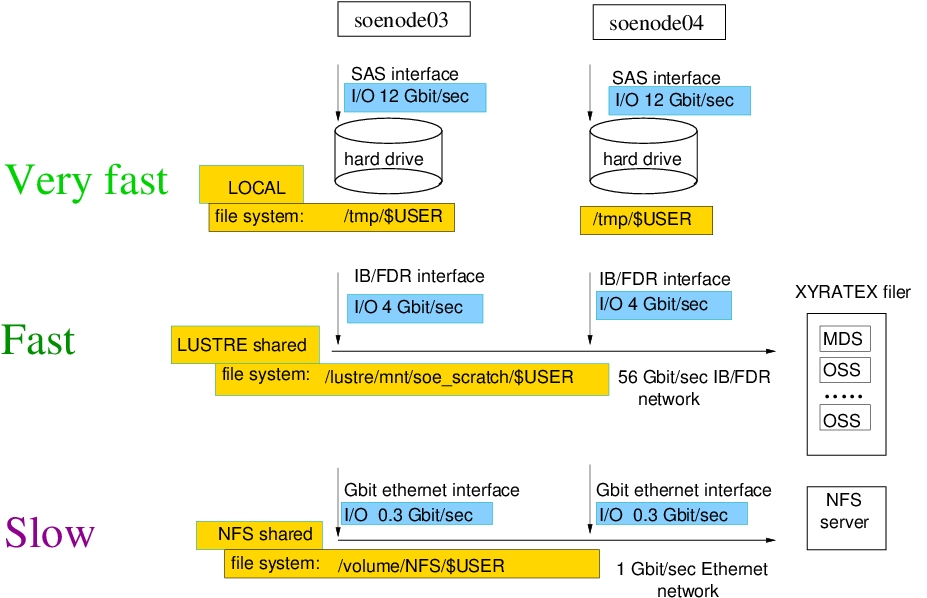

MYTMP="/tmp/$USER/$SLURM_JOB_ID" # local scratch directory on the node

mkdir -p "$MYTMP" # create scratch directory on the node

cp "$MYHDIR/$myrun" "$MYTMP" # copy the executable into the scratch

cp "$MYHDIR/input1.dat" "$MYTMP" # copy one input file into the scratch

cp "$MYHDIR/input2.dat" "$MYTMP" # copy another file into the scratch

# there may be more input files to copy

cd "$MYTMP" || exit 1 # run tasks in the scratch; abort if it is not accessible

./"$myrun" input1.dat input2.dat > run.out

cp "$MYTMP/run.out" "$MYHDIR" # copy the results back into the submit directory

rm -rf "$MYTMP" # remove scratch directory
