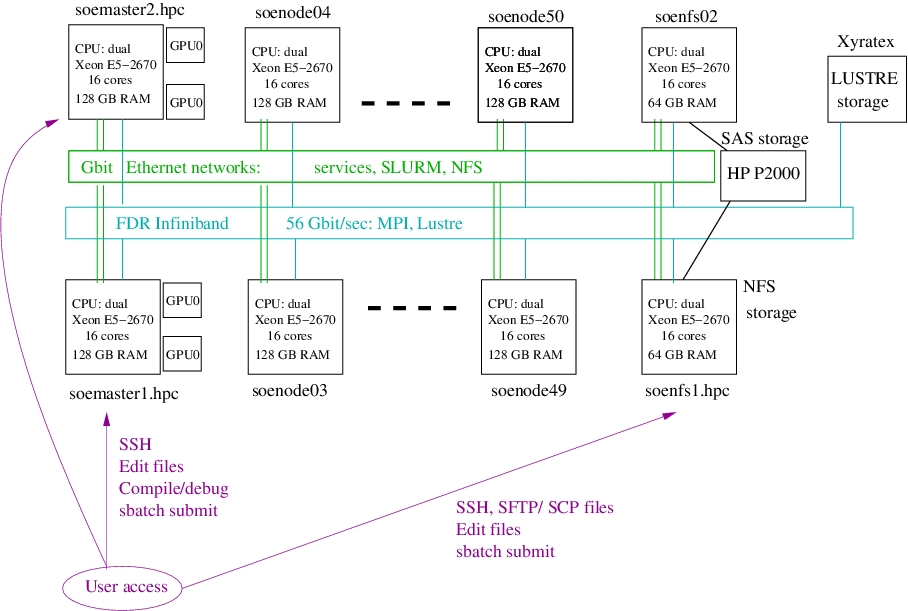

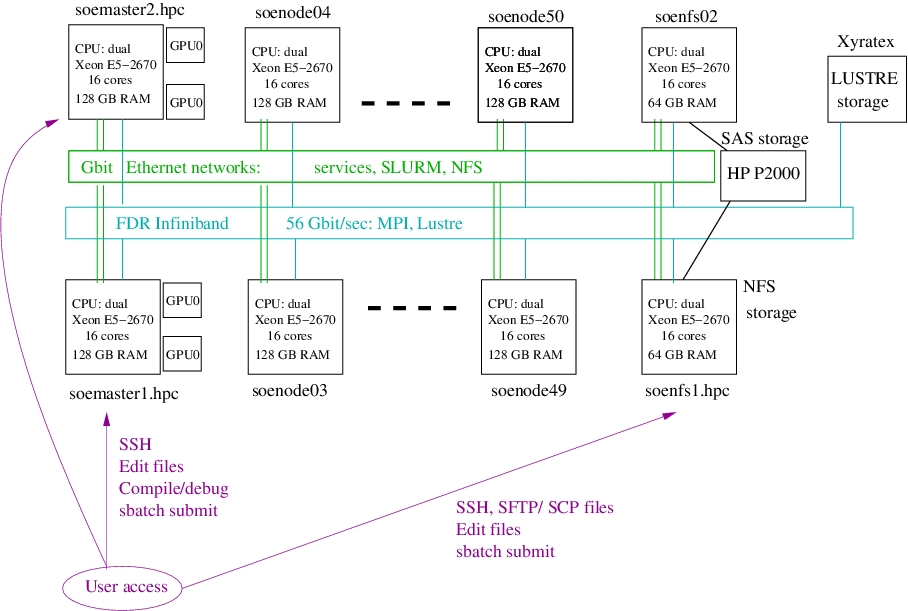

The cluster can be accessed via SSH to one of the front end hosts, soemaster1.hpc.rutgers.edu or soemaster2.hpc.rutgers.edu, using your Engineering user account, the same one used on DSV/EIT lab computers. Both front end hosts can be accessed from the RU networks only. On the front end hosts, you can develop, compile, and debug your code, then submit it to the queue system, SLURM. The queue system will dispatch the job to run on the compute nodes (soenode03, soenode04, etc.) when the resources become available.
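The login-and-submit workflow described above can be sketched roughly as follows. This is only an illustration: the user name `netid`, the script name `myjob.sh`, and the resource values are hypothetical placeholders, and the cluster may require additional SLURM options (such as a partition) that are not covered here. The commands that need network access are printed rather than executed, so the snippet can be tried anywhere.

```shell
# Hypothetical user name; replace with your Engineering account.
CLUSTER_USER="netid"
FRONT_END="soemaster1.hpc.rutgers.edu"

# Write a minimal SLURM batch script (resource values are placeholders).
cat > myjob.sh <<'EOF'
#!/bin/bash
#SBATCH --job-name=example
#SBATCH --ntasks=1
#SBATCH --time=00:10:00
#SBATCH --output=example-%j.out
hostname
EOF

# Print the commands instead of running them (dry run).
echo "ssh ${CLUSTER_USER}@${FRONT_END}"      # log in to a front end host
echo "sbatch myjob.sh"                       # submit the script to SLURM
echo "squeue -u ${CLUSTER_USER}"             # list your queued and running jobs
```

Once logged in to a front end host, `sbatch myjob.sh` queues the script, and `hostname` runs on whichever compute node SLURM assigns.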

For faster data file transfer to and from the cluster, it is recommended to scp or sftp directly to the NFS file server, soenfs1.hpc.rutgers.edu, bypassing the front end hosts. Your data will be available to all the cluster computers through the shared NFS file system, see the diagram below.
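As a rough sketch of a direct transfer to the NFS file server, the commands might look like the ones built below. The user name `netid` and the file name `results.tar.gz` are hypothetical, and the remote home directory (`~/`) is assumed as the destination. The commands are assembled and printed rather than run, since they need access to the RU networks.

```shell
# Hypothetical account and file names; replace with your own.
CLUSTER_USER="netid"
NFS_HOST="soenfs1.hpc.rutgers.edu"
LOCAL_FILE="results.tar.gz"

# Upload a local file to your home directory on the NFS server.
UPLOAD_CMD="scp ${LOCAL_FILE} ${CLUSTER_USER}@${NFS_HOST}:~/"

# Download the same file from the NFS server into the current directory.
DOWNLOAD_CMD="scp ${CLUSTER_USER}@${NFS_HOST}:~/${LOCAL_FILE} ."

# Print the commands (dry run); paste them into a terminal to execute.
echo "$UPLOAD_CMD"
echo "$DOWNLOAD_CMD"
```

For an interactive session, `sftp netid@soenfs1.hpc.rutgers.edu` works the same way, with `put` and `get` for uploads and downloads.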

You can check your home directory quota usage by running the command below on the NFS server:

chkquota

Two shared file systems are available on all the cluster computers: NFS, which holds the home directories, and a scratch space on Lustre. There is also a local /tmp on each compute node. At run time, please have your job use either the local /tmp or the Lustre scratch directory for efficient I/O.
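One way a job script could follow this advice is sketched below, using the node-local /tmp. The job name, the `RESULT_DIR` variable, and the `echo` standing in for the real computation are all assumptions for illustration. The Lustre scratch path is not given here, so it is not shown.

```shell
#!/bin/bash
#SBATCH --job-name=tmp-io
#SBATCH --ntasks=1

# Create a private working directory on the node-local /tmp.
WORKDIR=$(mktemp -d /tmp/job-XXXXXX)

# Remove the working directory when the script exits, even on failure.
trap 'rm -rf "$WORKDIR"' EXIT

# Hypothetical destination for results; defaults to the current directory.
RESULT_DIR="${RESULT_DIR:-$PWD}"

# Stand-in for the real computation: write output inside the working directory.
echo "result" > "$WORKDIR/output.txt"

# Copy only the final results back to the shared file system.
cp "$WORKDIR/output.txt" "$RESULT_DIR/output.txt"
```

Keeping intermediate files in the working directory and copying back only the final output limits traffic to the NFS home directories while the job runs.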

See the discussion on using the file systems on the SOE cluster.